Continuous integration, or CI, is a great tool for maintaining a healthy code base. As lint-staged’s motto puts it, “don’t let 💩 slip into your code base”: CI can run various checks to keep compilation errors, unit test failures, and code style violations from being merged into the main branch. CI can also do the packaging work, producing artifacts that are ready to be deployed to production. In this article, I’ll demonstrate how to use GitHub Actions to define CI workflows that check and package Java, Node.js, and Python applications.

Run Maven verify on push

CI typically has two phases. The first runs during development, before a feature branch is merged into master. The second runs right after the feature branch is merged. The first phase only needs to check the code, i.e. build the newly pushed commits on a branch and see if there are any violations or bugs. After the merge, CI runs the checks and the packaging together to produce a deployable artifact, usually a Docker image.

For a Java project, we use JUnit, Checkstyle, and SpotBugs as Maven plugins to run various checks whenever someone pushes to a feature branch. To do that with GitHub Actions, we need to create a workflow that sets up the Java environment and runs `mvn verify`. Here’s a minimal workflow definition in `project-root/.github/workflows/build.yml`:

```yaml
name: Build
```
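A minimal sketch of what this workflow could look like, following the description below. The `ubuntu-latest` runner, the Temurin distribution, and JDK 17 are assumptions (17 matches the `eclipse-temurin:17-jre` base image used later):

```yaml
name: Build

# Run on every push to any branch
on: push

jobs:
  verify:
    runs-on: ubuntu-latest  # assumed runner image
    steps:
      # Check out the commit that triggered the workflow
      - uses: actions/checkout@v3
      # Install a JDK and cache the local Maven repository, keyed on pom.xml
      # (distribution and version are assumptions)
      - uses: actions/setup-java@v3
        with:
          distribution: temurin
          java-version: '17'
          cache: maven
      # Compile and test; Checkstyle/SpotBugs also run here if their
      # check goals are bound to the Maven lifecycle in pom.xml
      - run: mvn --batch-mode verify
```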

- `on: push` defines the trigger of the workflow. Whenever a new commit is pushed to any branch, the workflow runs. You can limit the branches that trigger this workflow, or use other events like `pull_request`.
- `verify` is the name of a job we define in this workflow. A workflow can have multiple jobs; we’ll add another one named `build` very soon. Jobs are executed in parallel by default, which is why `jobs` is a mapping instead of a sequence. But we can add dependencies between jobs, as well as conditions that may prevent a job from running.
- A job consists of several `steps`; here we’ve defined three. A step can either be a command, indicated by `run`, or the use of a predefined unit of code, called an “action”, indicated by `uses`. There are tons of official and third-party actions we can use to build up a workflow, and we can also build our own actions to share within a company.
  - `actions/checkout` merely checks out the code into the workspace for further use. It only checks out the one commit that triggered this workflow. It’s also good practice to pin the version of an action, as in `actions/checkout@v3`.
  - `actions/setup-java` creates the specific JDK environment for us. `cache: maven` is important here because it uses `actions/cache` to upload Maven dependencies to GitHub’s cache server, so that they don’t need to be downloaded from the central repository again. The cache key is based on the content of `pom.xml`, and there are several rules for sharing caches between branches.
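As mentioned in the first item, the trigger can be narrowed down. A sketch, assuming `master` is the main branch and feature branches follow a hypothetical `feature/**` naming pattern:

```yaml
on:
  # Run the checks on pushes to master and to feature branches only
  push:
    branches:
      - master
      - 'feature/**'   # hypothetical branch naming convention
  # Also run them for pull requests that target master
  pull_request:
    branches:
      - master
```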

Initialize service containers for testing

During the test phase, we often need a local database service to run unit tests, integration tests, etc., and GitHub Actions comes with a ready-made solution for this purpose: service containers. Here is a minimal example of spinning up a Redis instance within a job:

```yaml
jobs:
```
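A sketch of such a job, assuming the official `redis` image from Docker Hub (the `7` tag is an assumption) and the same Java setup as in the `verify` job described earlier:

```yaml
jobs:
  verify:
    runs-on: ubuntu-latest
    # Service containers are started before any step runs
    services:
      redis:
        image: redis:7   # image tag is an assumption
        ports:
          # host:container, so tests can reach Redis at localhost:6379
          - 6379:6379
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-java@v3
        with:
          distribution: temurin
          java-version: '17'
          cache: maven
      - run: mvn --batch-mode verify
```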

Before running the `verify` job, the runner, which has Docker preinstalled, starts a Redis container and maps its port to the host, in this case 6379. Any process on the runner can then access Redis via `localhost:6379`. Keep in mind that containers take time to start, sometimes quite a long time, so GitHub Actions uses `docker inspect` to make sure a container has reached the healthy state before moving on to the next step. For this to work, we need to set `--health-cmd` for our services:

```yaml
redis:
```
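A sketch of the `redis` entry with a health check, following the pattern used in GitHub’s service container documentation; the interval, timeout, and retry values are assumptions to tune per project:

```yaml
services:
  redis:
    image: redis:7
    ports:
      - 6379:6379
    # Extra flags passed to `docker create`; the runner waits until
    # the health check reports the container as healthy
    options: >-
      --health-cmd "redis-cli ping"
      --health-interval 10s
      --health-timeout 5s
      --health-retries 5
```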

This is especially important for the MySQL service we are about to set up, because it usually takes longer to start:

```yaml
jobs:
```
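A sketch of a MySQL service with a health check. The image tag, the root password, and the database name are hypothetical test-only values. Pointing `mysqladmin ping` at `127.0.0.1` forces a TCP connection, so the check only passes once the real server is listening, not while the image is still initializing:

```yaml
jobs:
  verify:
    runs-on: ubuntu-latest
    services:
      mysql:
        image: mysql:8.0   # image tag is an assumption
        env:
          MYSQL_ROOT_PASSWORD: root   # hypothetical test-only credential
          MYSQL_DATABASE: test        # hypothetical database name
        ports:
          - 3306:3306
        # MySQL initializes slowly, so allow more retries than for Redis
        options: >-
          --health-cmd "mysqladmin ping -h 127.0.0.1"
          --health-interval 10s
          --health-timeout 5s
          --health-retries 10
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-java@v3
        with:
          distribution: temurin
          java-version: '17'
          cache: maven
      - run: mvn --batch-mode verify
```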

Share artifacts between jobs

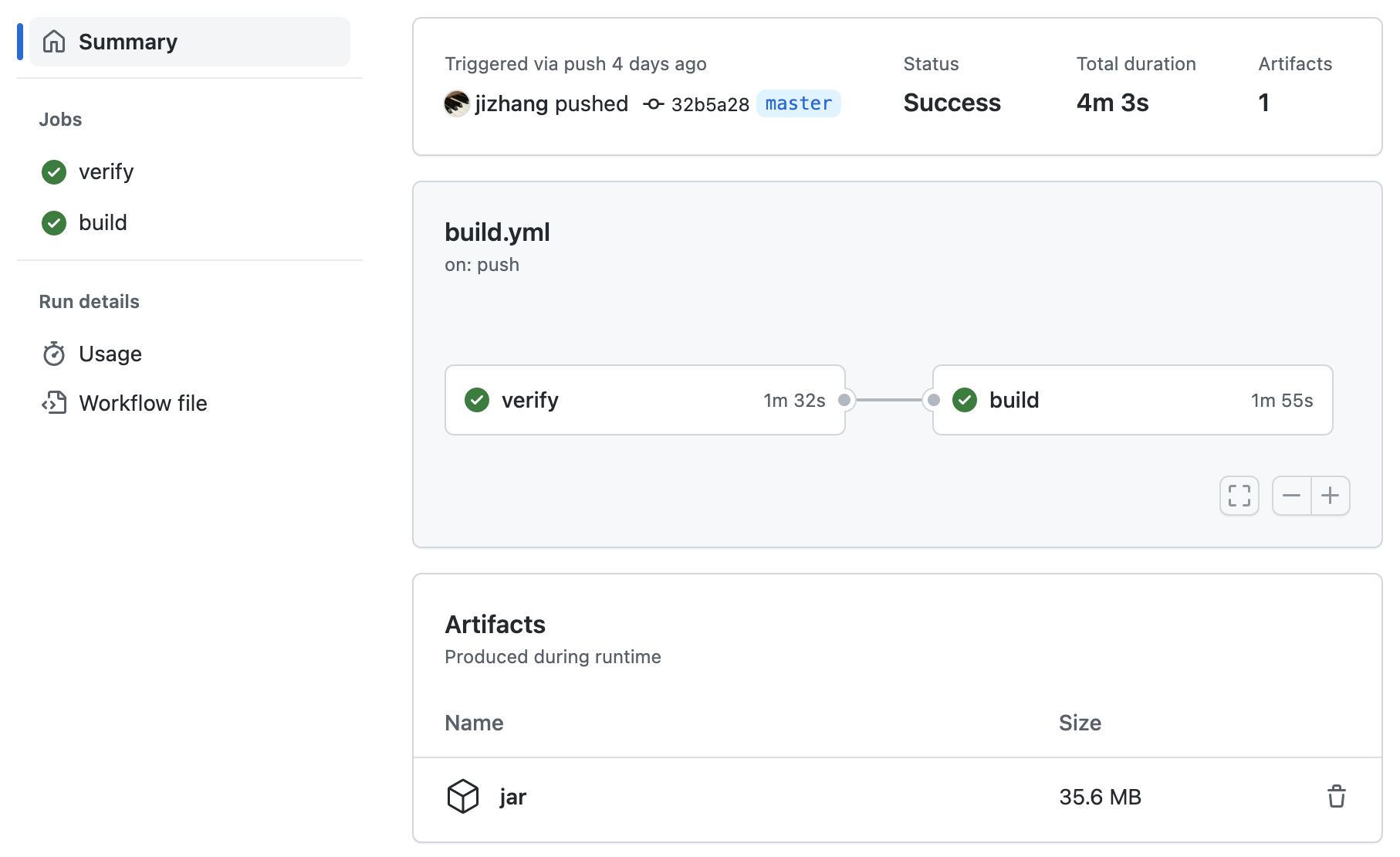

After `mvn verify`, there will be an uber JAR at `target/project-1.0-SNAPSHOT.jar`, and we want to build it into a Docker image for deployment. We’re going to create a separate job for this task. Because jobs in a workflow are executed independently and in parallel, the first thing we need to do is transfer the JAR file from the `verify` job to the new `build` job, and tell the runner that `build` depends on `verify`.

```yaml
env:
```
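A sketch of how the pieces described below fit together. The artifact name `jar` is hypothetical; downloading it into `target` recreates the same relative path, so `JAR_FILE` stays valid in both jobs:

```yaml
env:
  # Path of the uber JAR produced by `mvn verify`
  JAR_FILE: target/project-1.0-SNAPSHOT.jar

jobs:
  verify:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-java@v3
        with:
          distribution: temurin
          java-version: '17'
          cache: maven
      - run: mvn --batch-mode verify
      # Upload the single JAR file as a named artifact of this run
      - uses: actions/upload-artifact@v3
        with:
          name: jar   # hypothetical artifact name
          path: ${{ env.JAR_FILE }}

  build:
    # Run only after verify has finished successfully
    needs: verify
    runs-on: ubuntu-latest
    steps:
      # Restore the JAR into ./target of this job's workspace
      - uses: actions/download-artifact@v3
        with:
          name: jar
          path: target
```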

- `env` is the place to set variables shared across the workflow. Here we use it for the filename of the JAR; we’ll see more of it in the `build` job.
- `actions/upload-artifact` and its counterpart `download-artifact` are used to share files between jobs; such files are called artifacts. An artifact can be a single file or a directory, identified by its `name`. Artifacts can only be shared within the same workflow run. Once uploaded, they are also accessible through the GitHub UI. There are more examples in the documentation.
- `needs` creates a dependency between `verify` and `build`, so that they are executed sequentially.

Build Docker image for deployment

Let’s take a Spring Boot project as an example. There are guidelines on how to efficiently build the packaged JAR into a layered Docker image with the built-in tool provided by Spring Boot. The full Dockerfile can be found at the link above. The part we care about is the `JAR_FILE` argument:

```dockerfile
FROM eclipse-temurin:17-jre as builder
```

For the `build` job, the `docker` CLI is already installed on the runner, but we still need to take care of things like logging in to the container registry and tagging the image. Fortunately, there are actions for these purposes. Also, instead of pushing our image to Docker Hub, we use the GitHub Packages service. Here’s the full definition of the `build` job:

```yaml
env:
```
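A sketch of a `build` job along the lines described below, assuming the workflow-level `JAR_FILE` variable and a `verify` job that uploads the JAR as an artifact named `jar` (a hypothetical name). The action versions are assumptions, and the `permissions` block is one way to let `GITHUB_TOKEN` push packages. Note the explicit `context: .`: without it, `build-push-action` builds from the Git repository itself and would not see the downloaded JAR:

```yaml
jobs:
  build:
    needs: verify
    # Only package and publish from the master branch
    if: github.ref == 'refs/heads/master'
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write   # allow GITHUB_TOKEN to push to ghcr.io
    steps:
      # The Dockerfile lives in the project root, so check it out
      - uses: actions/checkout@v3
      - uses: actions/download-artifact@v3
        with:
          name: jar
          path: target
      - uses: docker/login-action@v2
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}
      # type=sha produces tags like sha-729b875
      - id: meta
        uses: docker/metadata-action@v4
        with:
          # ghcr.io needs a lowercase name; assumes owner/repo is lowercase
          images: ghcr.io/${{ github.repository }}
          tags: type=sha
      - uses: docker/build-push-action@v4
        with:
          context: .   # path context, includes the downloaded target/
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
          build-args: JAR_FILE=${{ env.JAR_FILE }}
```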

- The `if` statement indicates that this job is only executed under certain circumstances, in this case only on the `master` branch. There are other conditions you can use, and `if` can also be added to a step, say to upload the artifact only when the `verify` job runs on the `master` branch.
- `docker/login-action` sets up the credentials for logging in to GitHub Packages. The `GITHUB_TOKEN` is generated automatically, and its permissions can be controlled in the Settings.
- `docker/metadata-action` is used to extract metadata from the repository. In this example, I’m using the Git short commit hash as the Docker image tag, i.e. `sha-729b875`. This action extracts that information from the Git repository and exposes it as an output, which is another feature GitHub Actions provides for sharing information between steps and jobs. To be more specific:
  - `metadata-action` generates a list of tags based on the `images` and `tags` parameters. The above configuration will generate something like `ghcr.io/jizhang/proton:sha-729b875`. Other options can be found at this link.
  - We give this step an `id`, which is `meta`, and then access its output via `steps.meta.outputs.tags`.
  - The `images` and `tags` parameters, as well as `tags` in `build-push-action`, all support multi-line strings, so that multiple tags can be published.
- `docker/build-push-action` does the build-and-push job. The Dockerfile should be in the project root, and we pass the `JAR_FILE` argument, which points to the artifact we downloaded in the previous job.
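To illustrate the multi-line form mentioned above, here is a sketch that publishes both the short-SHA tag and a `latest` tag; adding `latest` is an assumption for illustration, not part of the original setup:

```yaml
steps:
  - id: meta
    uses: docker/metadata-action@v4
    with:
      # One image per line; more registries could be listed here
      images: |
        ghcr.io/jizhang/proton
      # One tag rule per line: sha-<short commit> plus a fixed "latest"
      tags: |
        type=sha
        type=raw,value=latest
  - uses: docker/build-push-action@v4
    with:
      context: .
      push: true
      # Newline-separated list, e.g. ...:sha-729b875 and ...:latest
      tags: ${{ steps.meta.outputs.tags }}
```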

If the build succeeds, the Docker image can be found in your Profile - Packages. Here’s the full example of using GitHub Actions with a Java project. The final pipeline looks like this:

Set up CI for a Node.js project

Similarly, we create two jobs, one for testing and one for building. In the test job, we use the official `setup-node` action to install a specific Node.js version. It also provides caching for the npm and Yarn package managers.

```yaml
jobs:
```
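A sketch of the test job, assuming Node.js 18, Yarn 1 (hence `--frozen-lockfile`), and hypothetical `test` and `build` scripts in `package.json`. The `dist` directory is uploaded under a hypothetical artifact name so the build job can reuse it:

```yaml
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # Install Node.js and cache Yarn's package cache, keyed on yarn.lock
      - uses: actions/setup-node@v3
        with:
          node-version: 18   # assumed version
          cache: yarn
      - run: yarn install --frozen-lockfile
      - run: yarn test    # hypothetical script
      - run: yarn build   # hypothetical script producing dist/
      - uses: actions/upload-artifact@v3
        with:
          name: dist   # hypothetical artifact name
          path: dist
```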

The build output is generally in the `dist` directory, so we just copy it into an Nginx image and publish it to GitHub Packages. I also have a project for demonstration.

```dockerfile
FROM nginx:1.17-alpine
```
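A sketch of the build job’s packaging steps, assuming the test job uploaded `dist` as an artifact named `dist` (hypothetical). The registry login and `metadata-action` steps work as described for the Java project and are left out here, so `steps.meta` refers to that omitted step:

```yaml
steps:
  # The Dockerfile is in the repository, so check it out first
  - uses: actions/checkout@v3
  # Restore the compiled assets into ./dist next to the Dockerfile
  - uses: actions/download-artifact@v3
    with:
      name: dist
      path: dist
  # (docker/login-action and the `meta` metadata step go here)
  - uses: docker/build-push-action@v4
    with:
      context: .   # path context, so the downloaded dist/ is included
      push: true
      tags: ${{ steps.meta.outputs.tags }}
```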

Set up CI for a Python project

For Python projects, one of the popular dependency management tools is Poetry, and the official `setup-python` action provides out-of-the-box caching for Poetry-managed virtual environments. Here’s the abridged `build.yml`; the full file can be found at this link.

```yaml
env:
```
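A sketch of the test job, following the pattern in the `setup-python` documentation: Poetry must already be on the `PATH` (here installed with `pipx`, which GitHub-hosted Ubuntu runners ship with) before `cache: poetry` can work. The Python version and the `pytest` command are assumptions:

```yaml
env:
  PYTHON_VERSION: '3.10'   # assumed version

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # Poetry has to be installed before setup-python can cache its venvs
      - run: pipx install poetry
      - uses: actions/setup-python@v4
        with:
          python-version: ${{ env.PYTHON_VERSION }}
          cache: poetry
      # Install the project and its dev dependencies into Poetry's venv
      - run: poetry install
      - run: poetry run pytest   # hypothetical test command
```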

For the build job, however, Python differs from the aforementioned projects in that it doesn’t produce a bundle such as a JAR or minified JS. So we have to invoke Poetry inside the Dockerfile to install the project dependencies, which makes the Dockerfile a bit more complicated:

```dockerfile
ARG PYTHON_VERSION
```

- According to the guidelines, Poetry should be installed in a separate virtual environment. Using `pipx` also works.
- For project dependencies, however, we install them directly into the system-level Python, because this container is only used by one application. Setting `virtualenvs.create` to `false` tells Poetry to skip creating a new environment for us.
- When installing dependencies, we skip the ones for development and include the `gunicorn` WSGI server.

Check out the Poetry documentation and the sample project’s `pyproject.toml` file for more information.
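Because the Dockerfile starts with `ARG PYTHON_VERSION`, the build job can pass in the same version the test job used, keeping CI and the image in sync. A sketch of the relevant steps, assuming a workflow-level `PYTHON_VERSION` variable (hypothetical) and the same login and tagging approach described for the Java project:

```yaml
steps:
  - uses: actions/checkout@v3
  - uses: docker/login-action@v2
    with:
      registry: ghcr.io
      username: ${{ github.actor }}
      password: ${{ secrets.GITHUB_TOKEN }}
  - id: meta
    uses: docker/metadata-action@v4
    with:
      images: ghcr.io/${{ github.repository }}   # assumes a lowercase repo name
      tags: type=sha
  - uses: docker/build-push-action@v4
    with:
      context: .
      push: true
      tags: ${{ steps.meta.outputs.tags }}
      labels: ${{ steps.meta.outputs.labels }}
      # Fills the Dockerfile's `ARG PYTHON_VERSION`
      build-args: |
        PYTHON_VERSION=${{ env.PYTHON_VERSION }}
```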

References

- https://docs.github.com/en/actions/automating-builds-and-tests/building-and-testing-java-with-maven

- https://docs.github.com/en/actions/publishing-packages/publishing-docker-images

- https://endjin.com/blog/2022/09/continuous-integration-with-github-actions

- https://github.com/vuejs/vue/blob/v2.7.14/.github/workflows/ci.yml